Failover architecture

ShareAspace supports failover of the server component (the ShareAspace host). If one ShareAspace host goes down, the failure can be detected and a second host can be started, picking up where the first host left off.

Setup requirements

To take advantage of the failover functionality, a minimum of two ShareAspace host nodes is required: one primary node and one secondary node. It is, however, possible to have any number of secondary nodes.

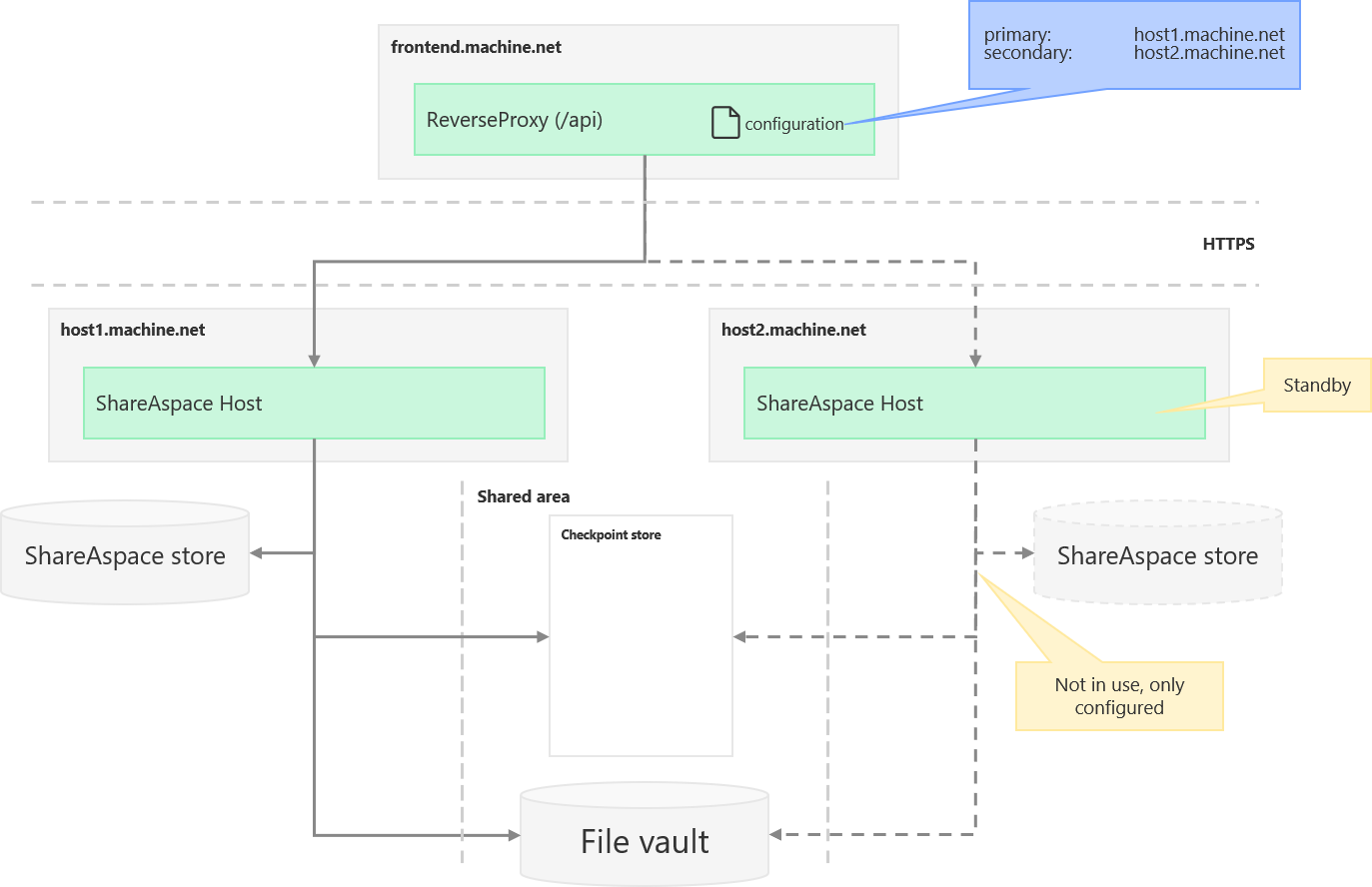

Initial setup

In the initial setup, one ShareAspace host is set up as the primary node and at least one host is set up as a secondary node.

The primary node is set up in the same way as a normal ShareAspace host.

The secondary node is set up in "restore mode".

The ShareAspace reverse proxy component is responsible for orchestrating the failover process. All nodes, i.e. the primary node and all secondary nodes, are registered with it.

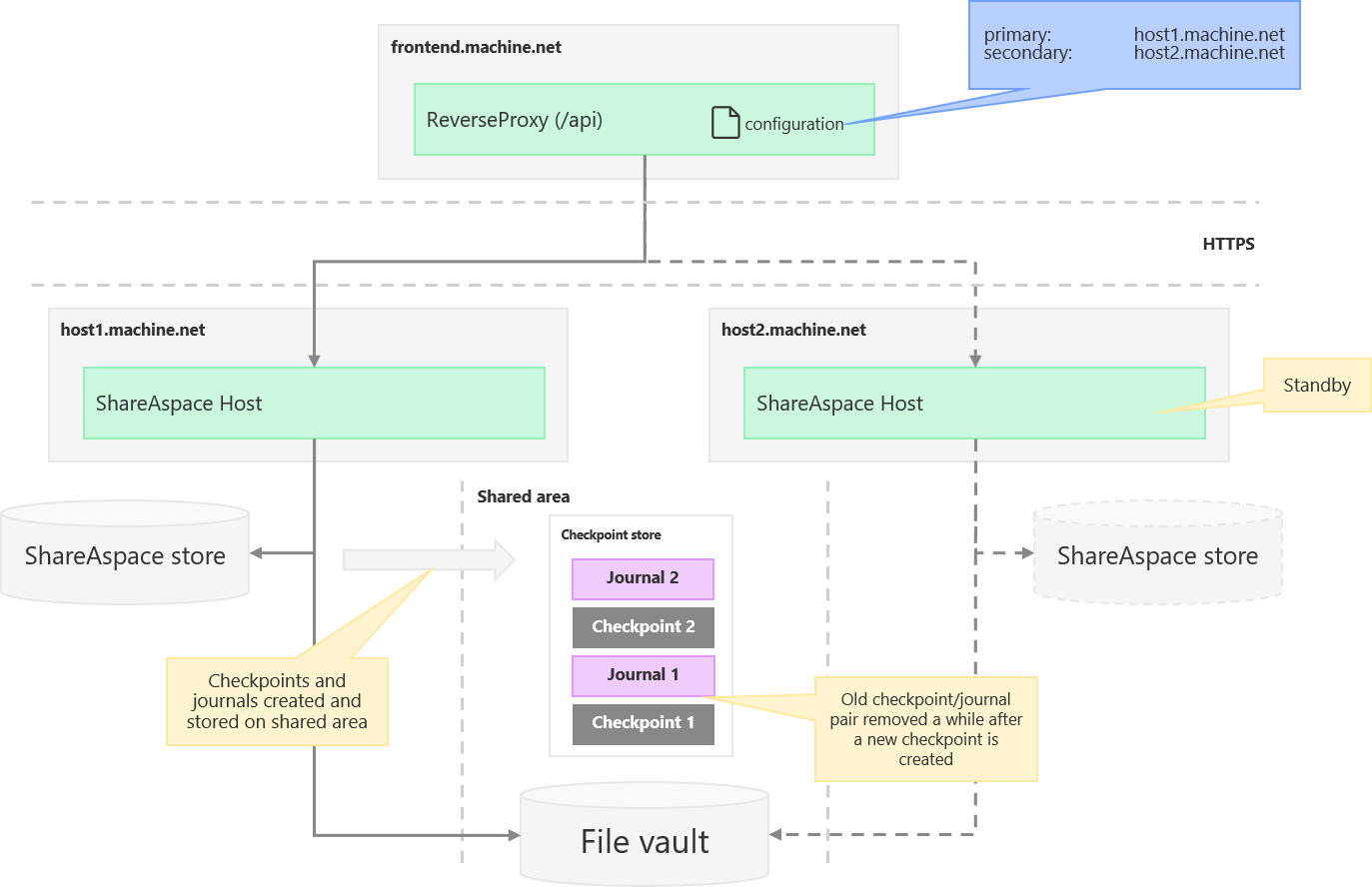

Primary node operational

- While the primary node is operational, all traffic arriving at the reverse proxy is routed to the primary node.

- The primary node has its own storage for managing databases and indexes. This store is deployed with the node and supports the IOPS requirements that apply to any ShareAspace host.

- On certain system events (such as a space being created or updated) and at a given threshold interval, the primary node produces a checkpoint file and places it in a shared area (a specific folder within the ShareAspace file vault).

- Between checkpoints, the primary node keeps a journal file in the shared area. All commits made to the data store of that node are recorded in this journal. The journal is stored together with the latest checkpoint.

- The primary node will clean up old checkpoints and old journals once it is safe to do so.
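The checkpoint and journal behaviour described above can be illustrated with a minimal sketch. It is not the ShareAspace implementation: the `SharedArea` class, the file names, and the JSON format are all hypothetical, and "safe to clean up" is simplified here to "a newer checkpoint has been written". The `restore` method shows why the journal is kept together with the latest checkpoint: loading the checkpoint and replaying the journal reproduces the state of the data store.

```python
import json
import tempfile
from pathlib import Path


class SharedArea:
    """Hypothetical stand-in for the shared folder in the file vault."""

    def __init__(self, root: Path) -> None:
        self.root = root
        self.root.mkdir(parents=True, exist_ok=True)

    def write_checkpoint(self, seq: int, state: dict) -> None:
        # Write the full state, then start an empty journal next to it.
        (self.root / f"checkpoint-{seq:06d}.json").write_text(json.dumps(state))
        (self.root / f"journal-{seq:06d}.log").write_text("")
        # Assumption: older files are safe to remove once the new checkpoint exists.
        for old in list(self.root.iterdir()):
            if int(old.stem.split("-")[1]) < seq:
                old.unlink()

    def append_commit(self, seq: int, key: str, value: str) -> None:
        # Every commit made between checkpoints is appended to the journal.
        with (self.root / f"journal-{seq:06d}.log").open("a") as journal:
            journal.write(json.dumps({"key": key, "value": value}) + "\n")

    def latest_seq(self) -> int:
        # Sequence number of the newest checkpoint in the shared area.
        return max(int(p.stem.split("-")[1])
                   for p in self.root.glob("checkpoint-*.json"))

    def restore(self) -> dict:
        # Load the latest checkpoint, then replay its journal in commit order.
        seq = self.latest_seq()
        state = json.loads((self.root / f"checkpoint-{seq:06d}.json").read_text())
        for line in (self.root / f"journal-{seq:06d}.log").read_text().splitlines():
            entry = json.loads(line)
            state[entry["key"]] = entry["value"]
        return state


if __name__ == "__main__":
    area = SharedArea(Path(tempfile.mkdtemp()) / "shared")
    area.write_checkpoint(1, {"a": "1"})
    area.append_commit(1, "b", "2")
    print(area.restore())
```

In this sketch a checkpoint is a full snapshot and the journal holds only the changes since that snapshot, so a restore never needs more than one checkpoint and one journal.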

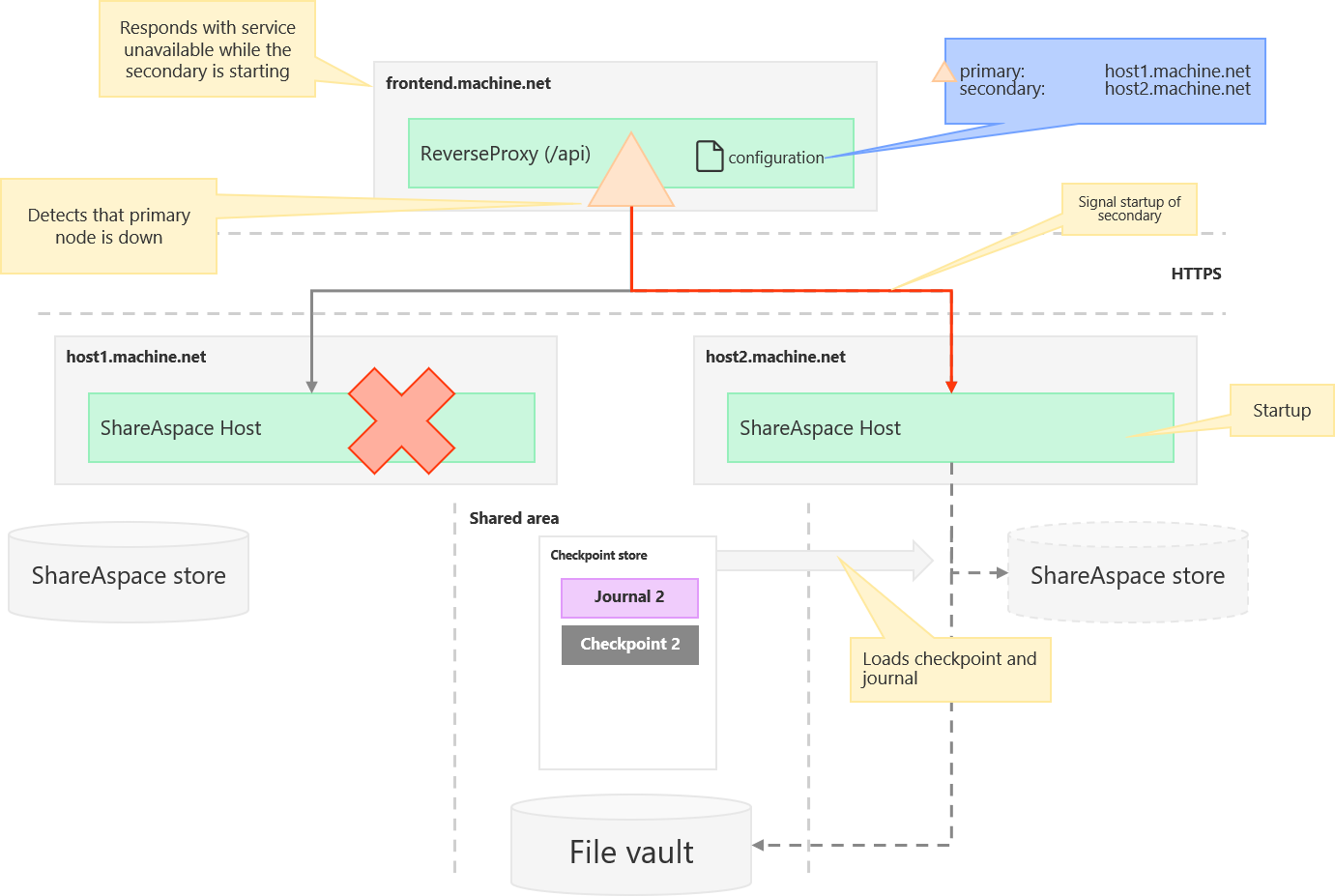

Health checks and failover

- At a configurable interval, the reverse proxy checks the health of the primary node.

- If the reverse proxy detects that the primary node is no longer operational, it starts the process of setting up one of the secondary nodes as the new primary node.

- The reverse proxy will send a startup signal to one of the secondary nodes.

- The secondary node, which has been on standby, loads the latest checkpoint and the latest journal from the shared file area.

- Once the secondary node has loaded the data, it becomes the new primary node. The reverse proxy updates its configuration: the old primary node is removed and the former secondary node is registered as the new primary node.
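The failover sequence above can be sketched as a single health-check step. This is an illustration under stated assumptions, not the reverse proxy's actual logic: `Node`, `ReverseProxy`, `is_healthy`, and `start` are hypothetical names, and the choice of which secondary to promote (here, the first one registered) is an assumption.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Node:
    name: str
    is_healthy: Callable[[], bool]  # hypothetical health probe
    start: Callable[[], None]       # hypothetical startup signal


@dataclass
class ReverseProxy:
    primary: Node
    secondaries: List[Node] = field(default_factory=list)

    def check_once(self) -> Node:
        """Run one health check and fail over if the primary is down."""
        if self.primary.is_healthy():
            return self.primary
        if not self.secondaries:
            raise RuntimeError("primary is down and no secondary is registered")
        # Assumption: promote the first registered secondary.
        candidate = self.secondaries.pop(0)
        # The startup signal makes the node load the checkpoint and journal.
        candidate.start()
        # The failed primary is dropped; the secondary is the new primary.
        self.primary = candidate
        return self.primary
```

A scheduler would call `check_once` at the configured interval. After a failover the failed node is no longer registered anywhere, which matches the manual step of re-adding it as a secondary.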

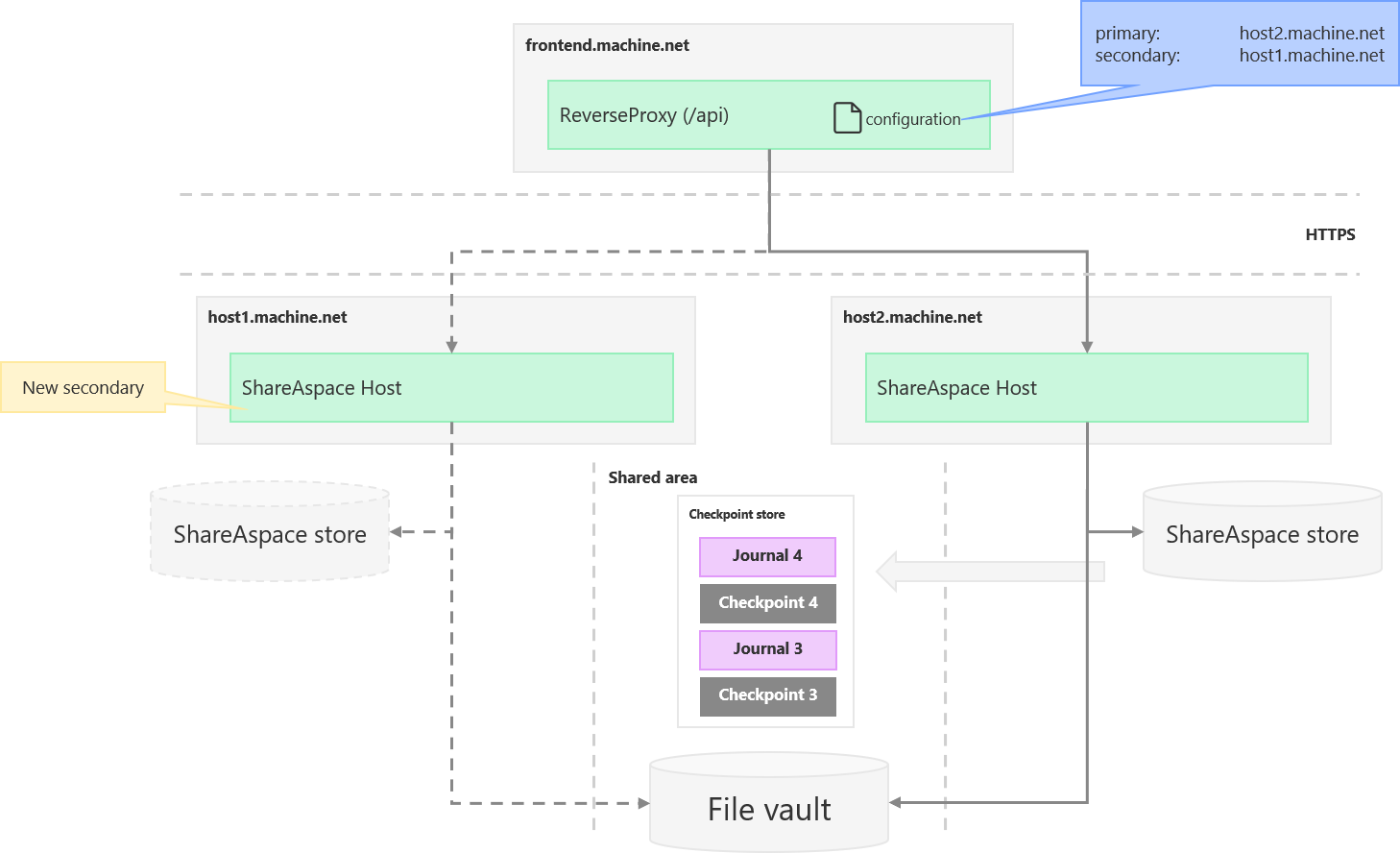

Setup of new secondary

- The node that failed can be reconfigured as a secondary node. This is a manual process; there is no automated process for it. It can, however, be scripted.

- The node that failed must be bootstrapped and put in "restore mode". Once it is in "restore mode", the configuration of the reverse proxy must be updated, i.e. the node is added as a secondary node registration in the configuration file of the reverse proxy.
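Because the re-registration step can be scripted, a script might look roughly like the sketch below. The layout of the reverse proxy configuration file is not described here, so the `primaryNode` and `secondaryNodes` keys and the `register_secondary` helper are purely hypothetical placeholders for whatever the real configuration uses.

```python
import copy


def register_secondary(config: dict, node_url: str) -> dict:
    """Return a copy of config with node_url added as a secondary node.

    The keys "primaryNode" and "secondaryNodes" are hypothetical.
    """
    if node_url == config.get("primaryNode"):
        raise ValueError("node is already registered as the primary node")
    updated = copy.deepcopy(config)
    nodes = updated.setdefault("secondaryNodes", [])
    # Registering the same node twice leaves the configuration unchanged.
    if node_url not in nodes:
        nodes.append(node_url)
    return updated
```

Returning a modified copy rather than editing in place lets a script compare the old and new configuration before writing anything back.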